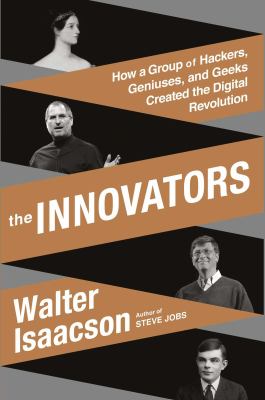

The Innovators

Author: Walter Isaacson

Release: October 7, 2014

Tagline: How a Group of Hackers, Geniuses, and Geeks Created the Digital Revolution

Publisher: Simon & Schuster

Genre: History, Computer Science, Non-Fiction, Technology

ISBN-10: 147670869X

ISBN-13: 978-1476708690

Synopsis: From Ada Lovelace to Vannevar Bush, Alan Turing, John von Neumann, J.C.R. Licklider, Doug Engelbart, Robert Noyce, Bill Gates, Steve Wozniak, Steve Jobs, Tim Berners-Lee, and Larry Page, Walter Isaacson explores the personalities that created the digital revolution.

Declassified by Agent Palmer: Innovation Meets Invention: A Review of The Innovators by Walter Isaacson

Quotes and Lines

Math “constitutes the language through which alone we can adequately express the great facts of the natural world,” she said, and it allows us to portray the “changes of mutual relationship” that unfold in creation. It is “the instrument through which the weak mind of man can most effectually read his Creator’s works.”

“I do not believe that my father was (or ever could have been) such a Poet as I shall be an Analyst; for with me the two go together indissolubly,” she wrote. (Ada)

Thus did Ada, Countess of Lovelace, help sow the seeds for a digital age that would blossom a hundred years later.

Sometimes innovation is a matter of timing. A big idea comes along at just the moment when the technology exists to implement it.

There was a lonely intensity to him, reflected in his love of long-distance running and biking. (Of Alan Turing)

…Claude Shannon, who that year turned in the most influential master’s thesis of all time, a paper that Scientific American later dubbed “the Magna Carta of the Information Age.”

“The desire to economize time and mental effort in arithmetical computations, and to eliminate human liability to error is probably as old as the science of arithmetic itself,” his memo began. – Howard Aiken

“A physicist is one who’s concerned with the truth,” he later said. “An engineer is one who’s concerned with getting the job done.” – J. Presper Eckert

“Life is made up of a whole concentration of trivial matters,” he once said. “Certainly a computer is nothing but a huge concentration of trivial matters.” – J. Presper Eckert

An invention, especially one as complex as the computer, usually comes not from an individual brainstorm but from a collaboratively woven tapestry of creativity.

Innovation requires articulation.

Most of us have been involved in group brainstorming sessions that produced creative ideas. Even a few days later, there may be different recollections of who suggested what first, and we realize that the formation of ideas was shaped more by the iterative interplay within the group than by an individual tossing in a wholly original concept. The sparks come from ideas rubbing against each other rather than as bolts out of the blue.

When the human player of the Turing Test uses words, he associates those words with real-world meanings, emotions, experiences, sensations, and perceptions. Machines don’t. Without such connections, language is just a game divorced from meaning.

The invention of computers did not immediately launch a revolution. Because they relied on large, expensive, fragile vacuum tubes that consumed a lot of power, the first computers were costly behemoths that only corporations, research universities, and the military could afford. Instead the true birth of the digital age, the era in which electronic devices became embedded in every aspect of our lives, occurred in Murray Hill, New Jersey, shortly after lunchtime on Tuesday, December 16, 1947. That day two scientists at Bell Labs succeeded in putting together a tiny contraption they had concocted from some strips of gold foil, a chip of semiconducting material, and a bent paper clip. When wiggled just right, it could amplify an electric current and switch it on and off. The transistor, as the device was soon named, became to the digital age what the steam engine was to the Industrial Revolution.

It came from the partnership of a theorist and an experimentalist working side by side, in a symbiotic relationship, bouncing theories and results back and forth in real time. It also came from embedding them in an environment where they could walk down a long corridor and bump into experts who could manipulate the impurities in germanium, or be in a study group populated by people who understood the quantum-mechanical explanations of surface states, or sit in a cafeteria with engineers who knew all the tricks for transmitting phone signals over long distances.

In addition to being a good engineer, he was a clever wordsmith who wrote science fiction under the pseudonym J. J. Coupling. Among his many quips were “Nature abhors a vacuum tube” and “After growing wildly for years, the field of computing appears to be reaching its infancy.”

Sometimes the difference between geniuses and jerks hinges on whether their ideas turn out to be right.

Moore and his colleagues had recently seen The Caine Mutiny, and they started plotting against their own Captain Queeg.

One of his key investment maxims was to bet primarily on the people rather than the idea. (Arthur Rock)

Grove’s mantra was “Success breeds complacency. Complacency breeds failure. Only the paranoid survive.”

In 1971 the region got a new moniker. Don Hoefler, a columnist for the weekly trade paper Electronic News, began writing a series of columns entitled “Silicon Valley USA,” and the name stuck.

“We at TMRC use the term ‘hacker’ only in its original meaning, someone who applies ingenuity to create a clever result, called a ‘hack,’” the club proclaimed.

Spacewar highlighted three aspects of the hacker culture that became themes of the digital age.

(Collaboration, open-source, interactive)

Innovation can be sparked by engineering talent, but it must be combined with business skills to set the world afire.

At its core were certain principles: authority should be questioned, hierarchies should be circumvented, nonconformity should be admired, and creativity should be nurtured.

“I am proud of the way we were able to engineer Pong, but I’m even more proud of the way I figured out and financially engineered the business,” he said. “Engineering the game was easy. Growing the company without money was hard.” – Nolan Bushnell

“Basic research leads to new knowledge,” Bush wrote. “It provides scientific capital. It creates the fund from which the practical applications of knowledge must be drawn.” – Vannevar Bush

“Advances in science when put to practical use mean more jobs, higher wages, shorter hours, more abundant crops, more leisure for recreation, for study, for learning how to live without the deadening drudgery which has been the burden of the common man for past ages.” – Vannevar Bush

“Many people suppose that computing machines are replacements for intelligence and have cut down the need for original thought,” Wiener wrote. “This is not the case.” (Professor Norbert Wiener)

*The government has repeatedly changed whether there should be a “D” for “Defense” in the acronym. The agency was created in 1958 as ARPA. It was renamed DARPA in 1972, then reverted to ARPA in 1993, and then became DARPA again in 1996.

Paul Baran, who did deserve to be known as the father of packet switching, came forward to say that “the Internet is really the work of a thousand people,” and he pointedly declared that most people involved did not assert claims of credit. “It’s just this one little case that seems to be an aberration,” he added, referring disparagingly to Kleinrock.

The Kleinrock controversy is interesting because it shows that most of the Internet’s creators preferred–to use the metaphor of the Internet itself–a system of fully distributed credit. They instinctively isolated and routed around any node that tried to claim more significance than the others. The Internet was born of an ethos of creative collaboration and distributed decision making, and its founders liked to protect that heritage. It became ingrained in their personalities–and in the DNA of the Internet itself.

“The culture of open processes was essential in enabling the Internet to grow and evolve as spectacularly as it has,” Crocker said later.

It was thus that in the second half of 1969–amid the static of Woodstock, Chappaquiddick, Vietnam War protests, Charles Manson, the Chicago Eight trial, and Altamont–the culmination was reached for three historic enterprises, each in the making for almost a decade. NASA was able to send a man to the moon. Engineers in Silicon Valley were able to devise a way to put a programmable computer on a chip called a microprocessor. And ARPA created a network that could connect distant computers. Only the first of these (perhaps the least historically significant of them?) made headlines.

All the world’s a net! And all the data in it merely packets

come to store-and-forward in the queues a while and then are

heard no more. ‘Tis a network waiting to be switched!

To switch or not to switch? That is the question:

Whether ‘tis wiser in the net to suffer

The store and forward of stochastic networks,

Or to raise up circuits against a sea of packets,

And by dedication serve them?

Innovation is not a loner’s endeavor, and the Internet was a prime example. “With computer networks, the loneliness of research is supplanted by the richness of shared research,” proclaimed the first issue of ARPANET News, the new network’s official newsletter.

J. C. R. Licklider and Robert Taylor, “The Computer as a Communication Device,” Science and Technology, Apr. 1968.

By the 1980s the LSD evangelist Timothy Leary would update his famous mantra “Turn on, tune in, drop out” to proclaim instead “Turn on, boot up, jack in.”

“Computers did more than politics did to change society.”

Brand wrote on the first page of the first edition, “A realm of intimate, personal power is developing–power of the individual to conduct his own education, find his own inspiration, shape his own environment, and share his adventure with whoever is interested. Tools that aid this process are sought and promoted by the Whole Earth Catalog.”

PARC’s creed: “The best way to predict the future is to invent it.”

…Gates was the prime example of the innovator’s personality. “An innovator is probably a fanatic, somebody who loves what they do, works day and night, may ignore normal things to some degree and therefore be viewed as a bit imbalanced,” he said. “Certainly in my teens and 20s, I fit that model.” He would work, as he had at Harvard, in bursts that could last up to thirty-six hours, and then curl up on the floor of his office and fall asleep. Said Allen, “He lived in binary states: either bursting with nervous energy on his dozen Cokes a day, or dead to the world.”

“In fact, our tagline in our ad had been ‘We set the standard,’” Gates recalled with a laugh. “But when we did in fact set the standard, our antitrust lawyer told us to get rid of that. It’s one of those slogans you can only use when it’s not true.”

Once again, the greatest innovation would come not from the people who created the breakthroughs but from the people who applied them usefully.

Stallman’s free software movement was imperfectly named. Its goal was not to insist that all software come free of charge but that it be liberated from any restrictions. “When we call software ‘free,’ we mean that it respects the users’ essential freedoms: the freedom to run it, to study and change it, and to redistribute copies with or without changes,” he repeatedly had to explain. “This is a matter of freedom, not price, so think of ‘free speech,’ not ‘free beer.’”

For Stallman, the free software movement was not merely a way to develop peer-produced software; it was a moral imperative for making a good society. The principles that it promoted were, he said, “essential not just for the individual users’ sake, but for society as a whole because they promote social solidarity–that is, sharing and cooperation.”

Like car engines, computers eventually became harder to take apart and put back together.

“Money is not the greatest of motivators,” Torvalds said. “Folks do their best work when they are driven by passion. When they are having fun. This is as true for playwrights and sculptors and entrepreneurs as it is for software engineers.”

Sometimes innovation involves recovering what has been lost.

At the official launch of the service (The Source) in July 1979 at Manhattan’s Plaza Hotel, the sci-fi writer and pitchman Isaac Asimov proclaimed, “This is the beginning of the Information Age!”

A newsgroup sprang up named alt.aol-sucks, where old-timers posted their diatribes. The AOL interlopers, read one, “couldn’t get a clue if they stood in a clue field in clue mating season, dressed as a clue, and drenched with clue pheromones.”

Berners-Lee was born in 1955, the same year as Bill Gates and Steve Jobs, and he considered it a lucky time to be interested in electronics.

“Once you’ve made something with wire and nails, when someone says a chip or a circuit has a relay you feel confident using it because you know you could make one,” he said. “Now kids get a MacBook and regard it as an appliance. They treat it like a refrigerator and expect it to be filled with good things, but they don’t know how it works. They don’t fully understand what I knew, and my parents knew, which was what you could do with a computer was limited only by your imagination.” – Tim Berners-Lee

After all, the whole point of the Web, and the essence of its design, was to promote sharing and collaboration.

“When we tell stories on the Internet, we claim computers for communication and community over crass commercialism.” – Justin Hall

*In March 2003 blog as both a noun and a verb was admitted into the Oxford English Dictionary.

Winograd had studied artificial intelligence but, after reflecting on the essence of human cognition, changed his focus, as Engelbart had, to how machines could augment and amplify (rather than replicate and replace) human thinking.

This merger of the personal computer and the Internet allowed digital creativity, content sharing, community formation, and social networking to blossom on a mass scale. It made real what Ada called “poetical science,” in which creativity and technology were the warp and woof, like a tapestry from Jacquard’s loom.

“People don’t invent things on the Internet. They simply expand on an idea that already exists.” – Ev Williams

Innovation is most vibrant in the realms where open-source systems compete with proprietary ones.